Those who read my 2016 blog post on comparative judgement (CJ) will know how interested I am in using CJ to improve our assessment of writing. Like many other schools, we took part in the KS2 ‘Sharing Standards’ project. We were really pleased to be part of a project in which schools across the UK shared their Year 6 writing, and we have high hopes that it will become a vehicle for driving assessment strategies forward.

The difference from our first two internal writing assessments using CJ was that this time we assessed only 30 children. However, each of those 30 children had a portfolio (three pieces of writing, different in genre and form) to be compared. Whilst I understand the principle behind this (a range of writing needs to be compared, not just one piece), for us it didn’t work as well. Here’s why…

Apples and Oranges

The first issue was that we weren’t comparing like with like. Some children had written a story, a diary and a report, whilst others had submitted instructions, a story and a biography. Even in the Mars and football example Chris Wheadon often refers to, a report was compared with a story. Now, whilst some may argue this shouldn’t matter, I would question how much prior information the ‘Mars’ child was given in order to write the report. Furthermore, when the scripts appeared on screen, they weren’t in any particular order of form or genre, and some portfolios contained completely different genres. Both issues made comparison very difficult.

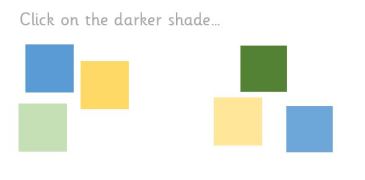

Rather than:

We were doing:

Staff had to scroll back and forth to try to compare the sets of work. I could see the frustration on their faces. It wasn’t as quick as it should have been. There wasn’t the same buzz in the room as we’d had previously, and there was less discussion. It was hard. Overall, it was a completely different experience for staff compared with when we had used CJ before, and not in a good way.

Recommendations

In future, I would suggest that the scripts from each school be organised on screen so that they appear in a set order:

- script 1, diary entry

- script 2, newspaper report

- script 3, story

This would make judging them through comparison much easier.

Another thought is to hold separate sessions so that each genre or form is ranked individually: for example, session 1 for diaries, session 2 for newspaper reports and session 3 for stories. Each child’s ranks could then be averaged to give a mean rank. I’m not amazing with data, and there is probably a huge flaw in doing this, but I’m just putting it out there!
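The averaging idea is simple arithmetic: each child gets one rank per genre session, and their mean rank is the sum of those ranks divided by the number of sessions. Here is a minimal Python sketch of that calculation. The pupil names, the three sessions and the rank values are all hypothetical, and it assumes every child is ranked in every session (otherwise the averages wouldn’t be comparable).

```python
from statistics import mean

# Hypothetical ranks (1 = strongest writer) from three separate judging
# sessions, one per genre. Names and values are illustrative only.
session_ranks = {
    "diaries":    {"Pupil A": 1, "Pupil B": 3, "Pupil C": 2},
    "newspapers": {"Pupil A": 2, "Pupil B": 1, "Pupil C": 3},
    "stories":    {"Pupil A": 1, "Pupil B": 2, "Pupil C": 3},
}


def mean_ranks(sessions: dict[str, dict[str, int]]) -> dict[str, float]:
    """Average each pupil's rank across all genre sessions.

    Assumes every pupil was ranked in every session; raises otherwise.
    """
    pupil_sets = [set(ranks) for ranks in sessions.values()]
    pupils = pupil_sets[0]
    if any(s != pupils for s in pupil_sets):
        raise ValueError("every pupil must be ranked in every session")
    return {
        pupil: mean(ranks[pupil] for ranks in sessions.values())
        for pupil in sorted(pupils)
    }


overall = mean_ranks(session_ranks)

# List pupils from lowest (best) mean rank to highest.
for pupil, avg in sorted(overall.items(), key=lambda item: item[1]):
    print(f"{pupil}: mean rank {avg:.2f}")
```

With these made-up numbers, Pupil A averages about 1.33, Pupil B 2.00 and Pupil C about 2.67. Note that a mean rank only says who came out ahead overall, not by how much.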

Final thought

I still think CJ is good, and it’s still in its infancy as a tool for primary writing assessment. With more consideration it could be great. But, please, let’s not try to compare apples and oranges.

An interesting read, but if we were comparing apples and oranges, surely we wouldn’t have achieved such high reliability, as that would be an impossible task? I suspect we were comparing different varieties of apples.

Can you have high reliability but low validity?

Short answer, yes.

I take your point about the varieties of apples. My concerns are more about the task conditions than about the act of using CJ. We want CJ to be as efficient as possible, and with a few tweaks to task conditions and presentation, I think it could really work.

It’s interesting that I didn’t find it like that at all. In fact, in some ways I found it harder to judge our own school’s writing, which mostly came from the same teaching sequences, than writing from other schools.

I wonder if maybe you had a different question in mind when you did it. If your thinking was “which of these two pieces of work is better?”, I can see that it could sometimes be difficult. My thinking was rather “which of these two writers is better?”, and I tried to use the breadth of evidence from across the pieces to decide that.

I would be very reluctant to see text types specified, as I think that (a) there are no agreed types, and (b) doing so is likely to narrow teaching further.

I do think it’s very useful to have both fiction and non-fiction texts included. I could also imagine a national statutory model which included one more structured piece supported by other evidence, but I think that separate text-type judgements would be a mistake.

One challenge I did wonder about is how easy it would be to avoid reviewing my own class’s work at all. I found that tricky, as it’s hard to put your wider knowledge out of mind, which rather highlights the issue with the current TA approach!

I’m glad you found it a positive experience. It’s certainly miles better than APP, but in our school we found it better to moderate only one piece of writing at a time, particularly when comparing writers of a similar standard. Have you tried this in your school for comparison?

With regard to the point you raised about having a different question in mind, the answer is no, we didn’t. Of course we were judging the quality of writing as a whole, but being faced with a mixture of different genres and forms made it harder. Some forms lend themselves to certain styles more than others. For example, in some portfolios we judged, the only piece of non-fiction was instructional writing. This form can be quite limiting in what it lets a child show, compared with, say, a non-chronological report. Similarly, I would argue that most children would be more likely to use bullet points or numbered lists in instructional writing, but less so in non-chronological reports. Setting the forms would make comparisons fairer. As well as this, most portfolios had two fiction texts and one non-fiction text, but not all of them did…

As for specifying text types for end-of-key-stage tests, I would like to see a return to strict testing conditions, whereby children are given a prompt on the day(s). As in previous years, this could be done for several different pieces, resulting in an overall grade. It may not be what many teachers would choose, but it would certainly help to eliminate much of the gaming that goes on with current teacher assessment. Also, this way, there would be no narrowing of teaching. CJ might provide a high reliability score, but if what we are judging isn’t a true reflection of what the children can write independently, then we are kidding ourselves as a profession.

I fear that moderating only a single piece of work at a time takes us to the very worst of the approach of the old writing test. It is, inevitably, easier if what you are looking for is whether or not they’ve used bullet points, but I’m not persuaded that that’s such a valuable marker of high quality writing.

I think I’d like to see a middle ground. I agree that we need much greater clarity and firmer expectations about the circumstances under which children write, as the current system fails us all. I think I’d be happier with a more mixed system, though, than with setting specific tasks; it’s so hard to provide a level playing field in such cases. Even the simplest of writing tasks under the old test system would have favoured some pupils over others, and the topics inevitably have to be so narrow as to invite tedious writing. I think there is something more creative that could be done, but it won’t be easy, I’m sure!